While AI learns, threats adapt,

stay one step ahead with

Astra's AI pentesting

Hacker-style pentests and continuous vulnerability scanning for LLMs, AI apps, ML pipelines, and MCPs,

delivered as comprehensive AI pentesting services on one platform.

6-figure leaks. $100M stock crashes.

AI’s threat surface is real

$100M

Vanished from Alphabet’s market value after Google Bard messed up a simple fact about a telescope during a live demo

$76,000

SUV sold for $1 after a dealership’s AI chatbot was tricked into honoring a fake deal

1.2%

Of ChatGPT Plus users had their data-chats, names, even payment info exposed during OpenAI’s 2023 security breach

100,000+

Users had their private conversations leaked after an open-source LLM went live without strong deployment standard

$4.5M

Fine paid by a company for using sensitive data to train LLMs without consent reply

$1M+

In data losses hit Amazon after confidential info may have trained ChatGPT

$100M

Vanished from Alphabet’s market value after Google Bard messed up a simple fact about a telescope during a live demo

$76,000

SUV sold for $1 after a dealership’s AI chatbot was tricked into honoring a fake deal

1.2%

Of ChatGPT Plus users had their data-chats, names, even payment info exposed during OpenAI’s 2023 security breach

100,000+

Users had their private conversations leaked after an open-source LLM went live without strong deployment standard

$4.5M

Fine paid by a company for using sensitive data to train LLMs without consent reply

$1M+

In data losses hit Amazon after confidential info may have trained ChatGPT

$100M

Vanished from Alphabet’s market value after Google Bard messed up a simple fact about a telescope during a live demo

$76,000

SUV sold for $1 after a dealership’s AI chatbot was tricked into honoring a fake deal

1.2%

Of ChatGPT Plus users had their data-chats, names, even payment info exposed during OpenAI’s 2023 security breach

100,000+

Users had their private conversations leaked after an open-source LLM went live without strong deployment standard

$4.5M

Fine paid by a company for using sensitive data to train LLMs without consent reply

$1M+

In data losses hit Amazon after confidential info may have trained ChatGPT

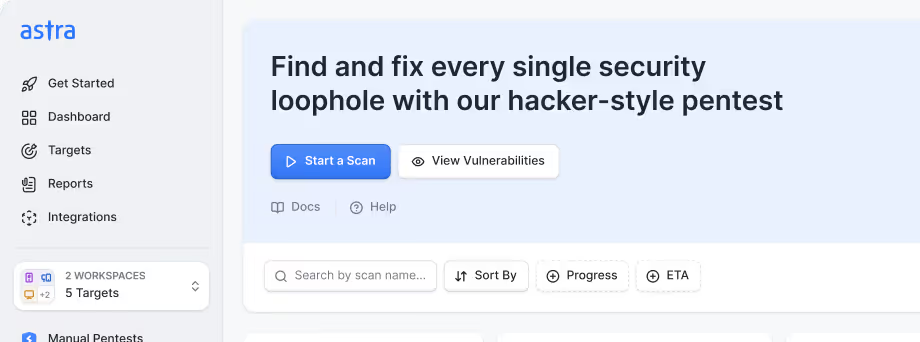

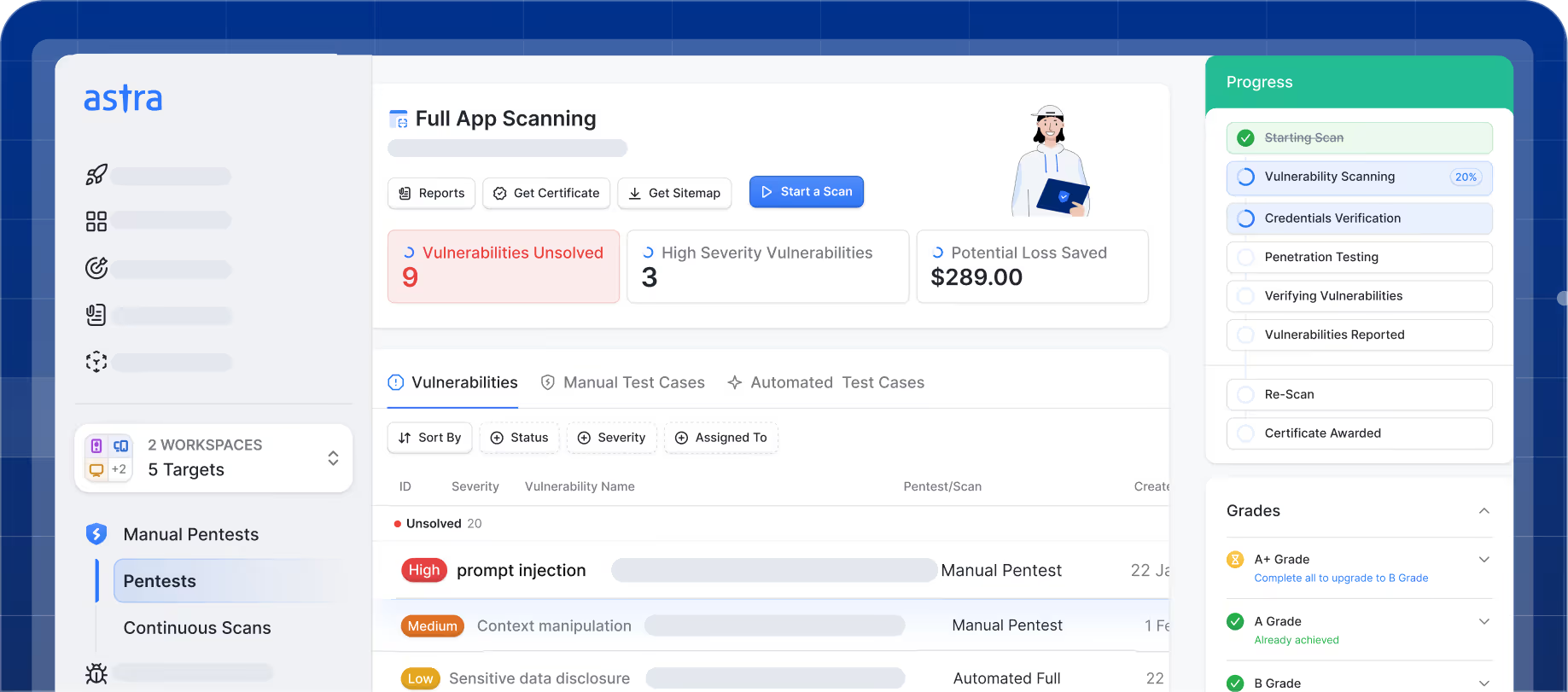

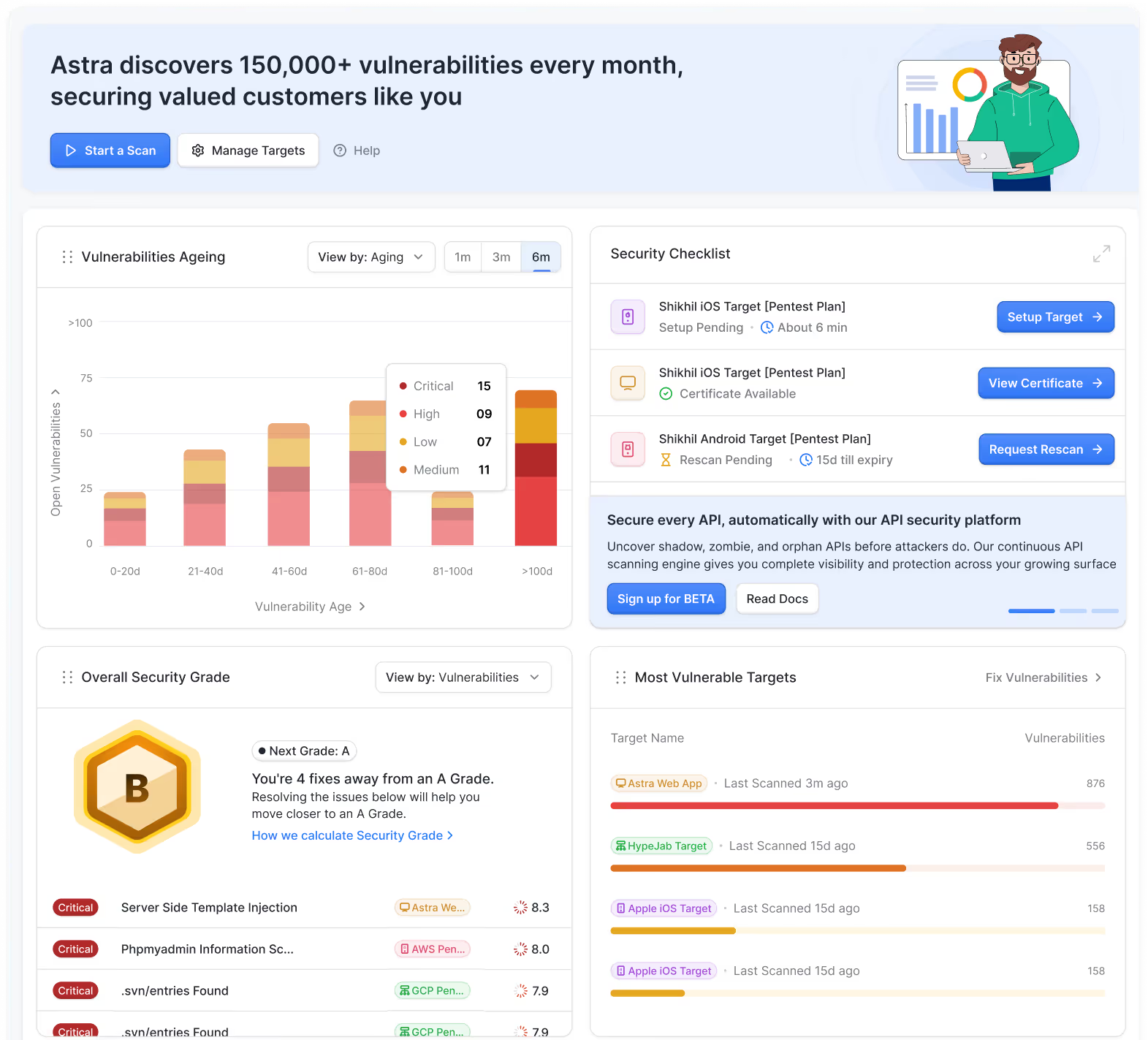

Astra’s one-of-a-kind Web Pentest Platform

turns your AI infrastructure into Fort Knox

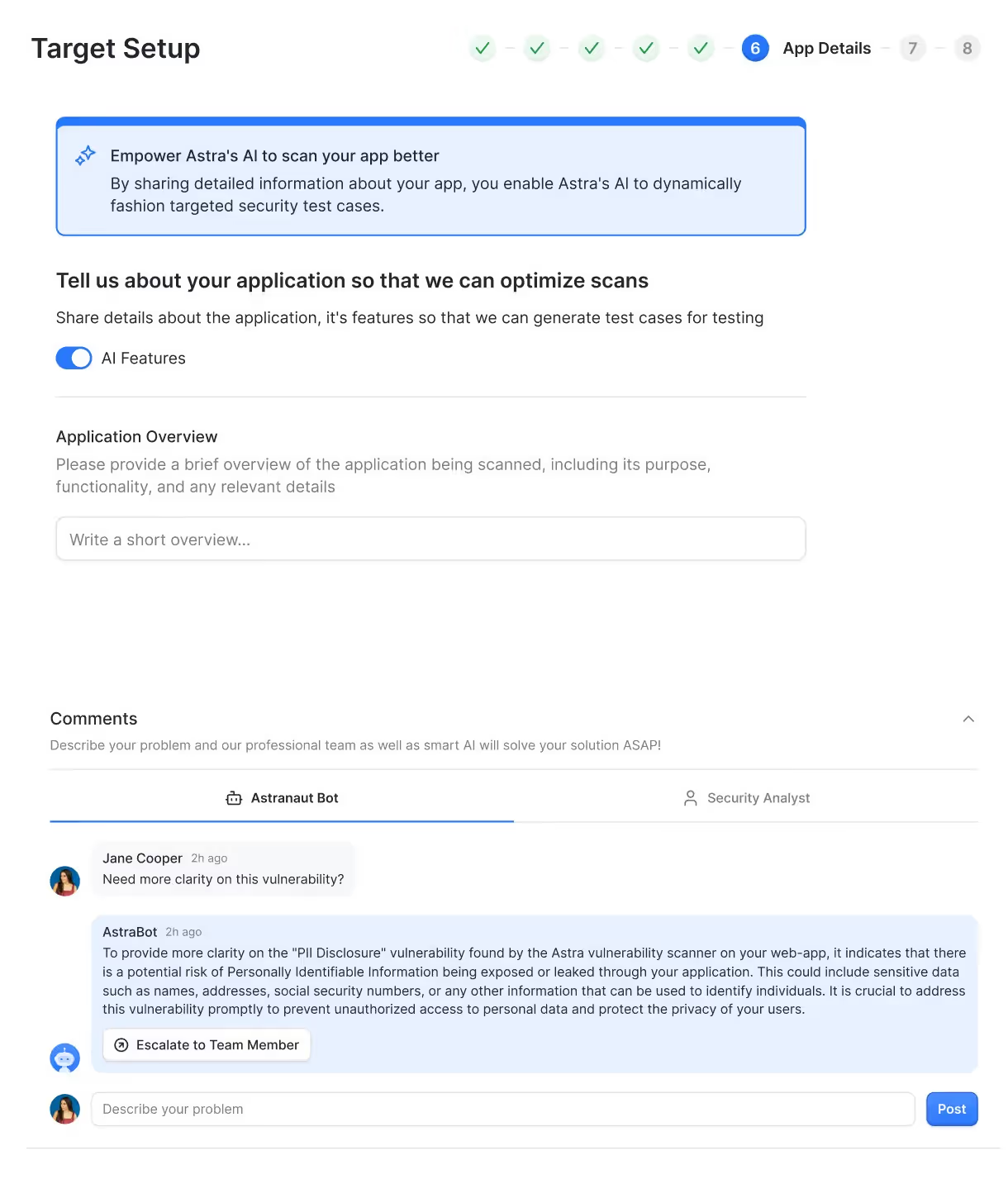

Setup & Onboarding

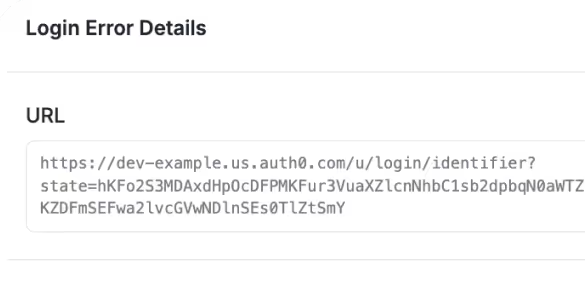

Go from sign-up to discovering vulnerabilities in minutes. Our self-serve onboarding accelerates AI application penetration testing while giving you support from your CSM whenever needed.

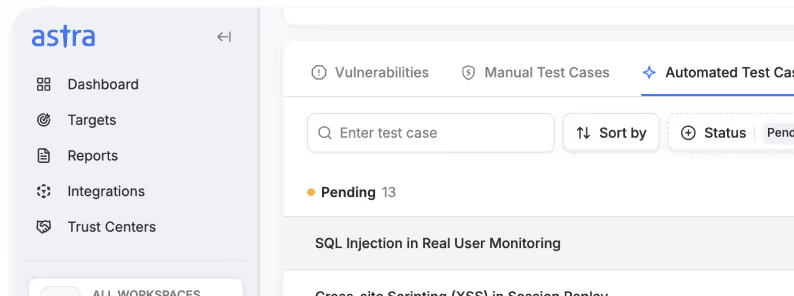

Manual Penetration Test

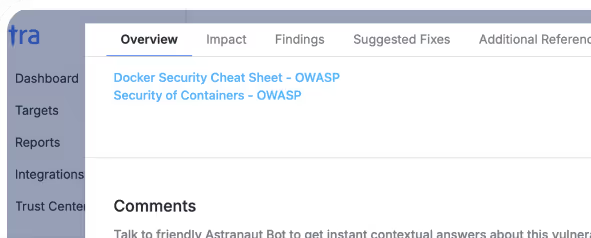

Identify threats and attack vectors with comprehensive manual and automated AI pentesting in 8-15 business days. Scrutinize data pipeline for data poisoning, prompt injection, model extraction, run bias tests, assess guardrails for emerging CVEs, extraction attacks, and authentication weaknesses for complete AI security testing.

Reporting & Remediation

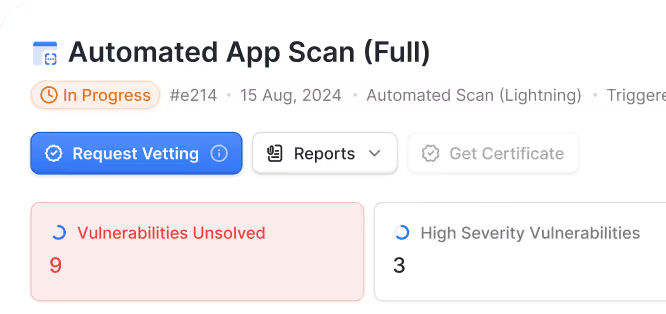

Improve your AI/ML security posture with actionable reports, video PoCs, and detailed steps to fix a vulnerability. Get two re-scans to validate fixes and Astra's publicly verifiable certificate once you pass the pentest.

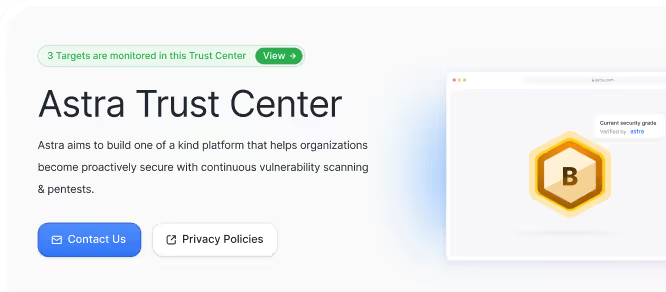

Pentest Certificate

Show off your security chops! Once we've validated your fixes, you'll receive Astra's publicly verifiable pentest certificate. It's like a security badge of honor for your AI systems, LLMs, MLOps pipelines, and MCP servers.

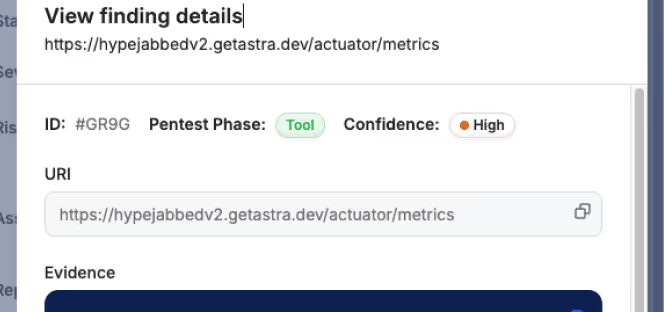

Continuous Pentesting

The security party doesn't stop! Keep your AI infrastructure safe 24/7 with our DAST scanner and API security platform. Use our PTaaS capabilities to continuously pentest every shiny new feature or LLM deployment, monitor continuously for regressions, emerging AI threats, and adversarial attacks.

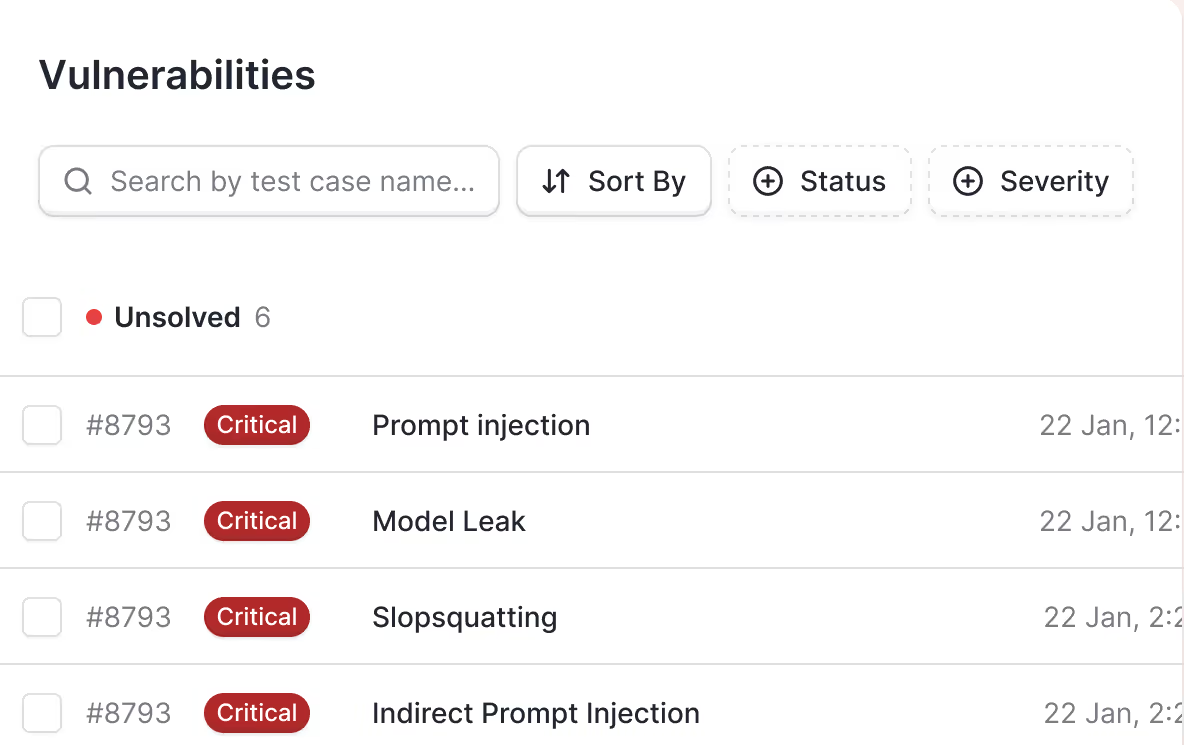

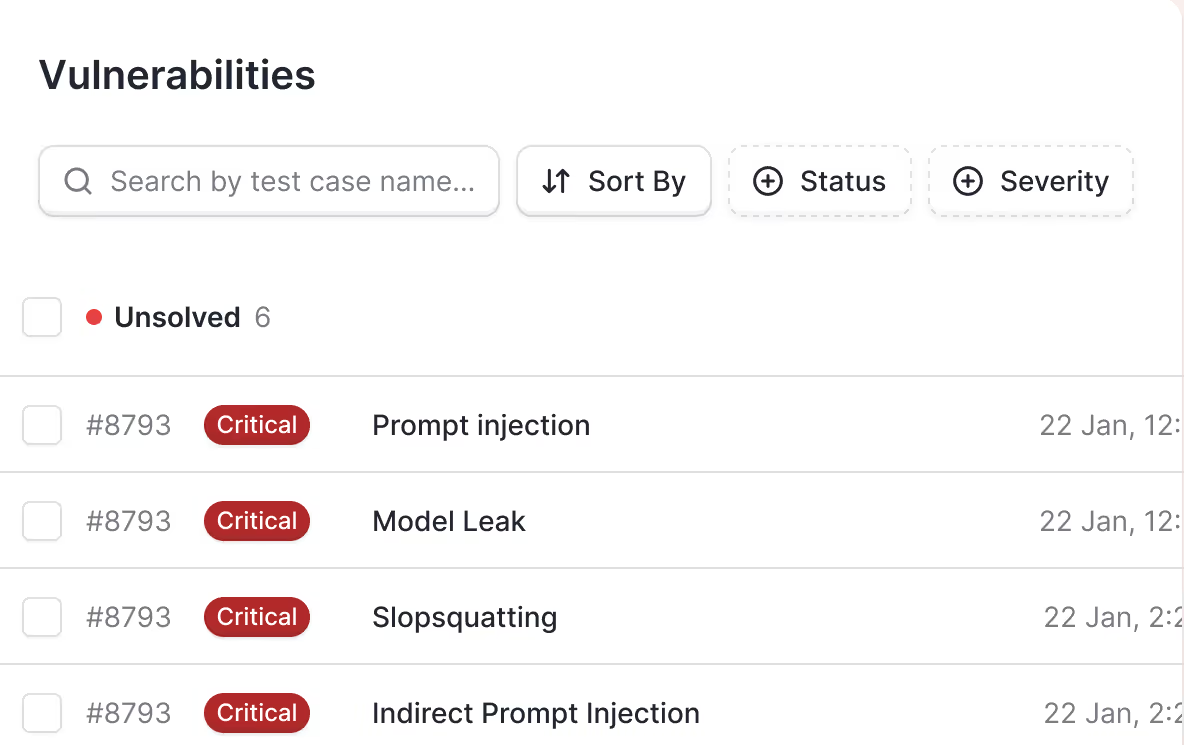

Don’t let AI application vulnerabilities rewrite your risk profile

Model manipulation

Adversarial inputs disrupt model behavior, resulting in incorrect decisions or reputational harm.

Model Data poisoning

Attackers manipulate training data to generate malicious outputs.

Context manipulation

Attackers exploit the memory of chat-based systems by crafting fake prior messages, altering how future responses are generated.

Permissive integrations

Third-party tools or model hubs may create unmonitored backdoors.

Leaky APIs

These new exposed endpoints are entry points to your IP, customer data, or model configurations.

Model manipulation

Adversarial inputs disrupt model behavior, resulting in incorrect decisions or reputational harm.

Model Data poisoning

Attackers manipulate training data to generate malicious outputs.

Context manipulation

Attackers exploit the memory of chat-based systems by crafting fake prior messages, altering how future responses are generated.

Permissive integrations

Third-party tools or model hubs may create unmonitored backdoors.

Leaky APIs

These new exposed endpoints are entry points to your IP, customer data, or model configurations.

Model manipulation

Adversarial inputs disrupt model behavior, resulting in incorrect decisions or reputational harm.

Model Data poisoning

Attackers manipulate training data to generate malicious outputs.

Context manipulation

Attackers exploit the memory of chat-based systems by crafting fake prior messages, altering how future responses are generated.

Permissive integrations

Third-party tools or model hubs may create unmonitored backdoors.

Leaky APIs

These new exposed endpoints are entry points to your IP, customer data, or model configurations.

Compliance gaps

Governance rules regulating AI are becoming stricter. Lack of controls could mean fines or funding loss.

Indirect Prompt Injection

LLMs can be manipulated through external content, like URLs, documents, or web pages, that sneak in hidden instructions to the model.

Model confusion

Ambiguous or recursive instructions can trick the model into conflicting logic loops, leading to unpredictable or biased behavior.

Jailbreak prompts

Role-playing, misdirection, and cleverly crafted prompts can bypass a model’s ethical boundaries and force it to generate harmful or restricted output.

Sensitive information leakage

LLMs may unintentionally expose internal system details or private training data, especially in debug modes or when exposed to probing inputs.

Compliance gaps

Governance rules regulating AI are becoming stricter. Lack of controls could mean fines or funding loss.

Indirect Prompt Injection

LLMs can be manipulated through external content, like URLs, documents, or web pages, that sneak in hidden instructions to the model.

Model confusion

Ambiguous or recursive instructions can trick the model into conflicting logic loops, leading to unpredictable or biased behavior.

Jailbreak prompts

Role-playing, misdirection, and cleverly crafted prompts can bypass a model’s ethical boundaries and force it to generate harmful or restricted output.

Sensitive information leakage

LLMs may unintentionally expose internal system details or private training data, especially in debug modes or when exposed to probing inputs.

Compliance gaps

Governance rules regulating AI are becoming stricter. Lack of controls could mean fines or funding loss.

Indirect Prompt Injection

LLMs can be manipulated through external content, like URLs, documents, or web pages, that sneak in hidden instructions to the model.

Model confusion

Ambiguous or recursive instructions can trick the model into conflicting logic loops, leading to unpredictable or biased behavior.

Jailbreak prompts

Role-playing, misdirection, and cleverly crafted prompts can bypass a model’s ethical boundaries and force it to generate harmful or restricted output.

Sensitive information leakage

LLMs may unintentionally expose internal system details or private training data, especially in debug modes or when exposed to probing inputs.

AI regulations are coming fast.

Is your security ready?

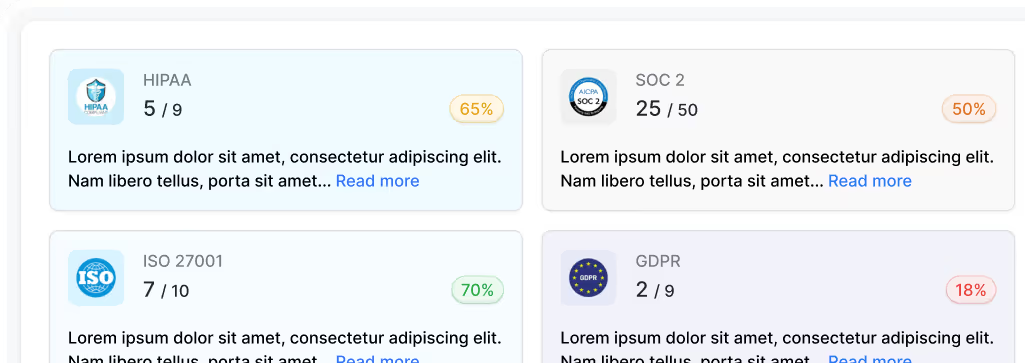

Astra’s pentests are designed to help you stay compliant with evolving AI security frameworks

EU AI Act

Tiered risk-based compliance mandates robust risk controls.

ISO/IEC 42001

AI Management System for responsible AI deployment.

NIST AI Risk Management Framework

Focuses on AI trustworthiness, bias, and resilience.

GDPR/CCPA

Data usage in training must remain privacy-compliant.

SOC 2 & HIPAA

AI platforms handling regulated data must prove security controls.

Astra's pentest platform keeps AI working for you, not against you

Attack scenarios we simulate

PSD2 & Open Banking

Information Disclosure

Plugin Abuse / Data Leakage

Interface Vulnerabilities

PSD2 & Open Banking

Information Disclosure

Plugin Abuse / Data Leakage

Interface Vulnerabilities

PSD2 & Open Banking

Information Disclosure

Plugin Abuse / Data Leakage

Interface Vulnerabilities

This forms the foundation for our offensive AI pentesting strategy and helps us surface the most impactful risks early

AI at the core. Smarter pentests, fewer headaches

AI-powered threat modeling

Auto-generates threat scenarios based on your app’s features and workflows for more relevant test cases.

Vulnerability resolution assistant

Our chatbot helps devs fix issues faster with contextual, app-specific guidance.

Smarter auth handling

Handles complex login flows, retries, and cookie prompts during scans with AI-driven logic.

Reduced false positives

AI validates findings to cut noise and highlight what actually matters.

Real-time scan decisions

Adapts scan strategies on the fly based on app behavior and structure.

AI-built Trust Center

Summarizes your security posture for easy sharing with customers and auditors

Our offensive, AI powered engine helps us build detections, discover & correlate vulnerabilities at scale

Astra Security is at the forefront of

AI pentest research

Why Astra Security?

Trusted by 1000+ businesses (150+ AI-first), 147K+ assets tested in 2024

engineers

Trust isn't claimed, it's earned

Astra meets global standards with accreditations from

Beyond AI pentesting, full-stack security coverage

Astra’s platform combines AI-aware pentests, automated DAST, and deep API security

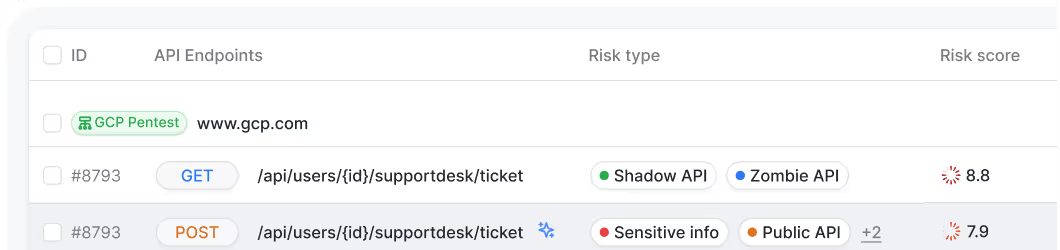

API Security Platform

Discovers all APIs—including shadow, zombie, and undocumented.

Deployed in minutes via Postman, traffic mirroring, or API specs.

Integrates with 8+ platforms like Kong, Azure, AWS, Apigee & more.

Get full API visibility and scan results in under 30 mins.

15,000+ DAST tests, OWASP API Top 10 coverage, and runtime risk classification.

Upload OpenAPI specs to tailor scans to your environment.

Continuous Pentesting (PTaaS)

Manual + automated pentests with 15,000+ evolving test cases beyond OWASP & PTES

Hacker-style testing to catch logic flaws & payment bypasses automation misses

Gray & black box pentesting tailored to requirements

Zero false positives, every finding is human-verified

Certified in-house experts (OSCP, CEH, eWPTXv2)

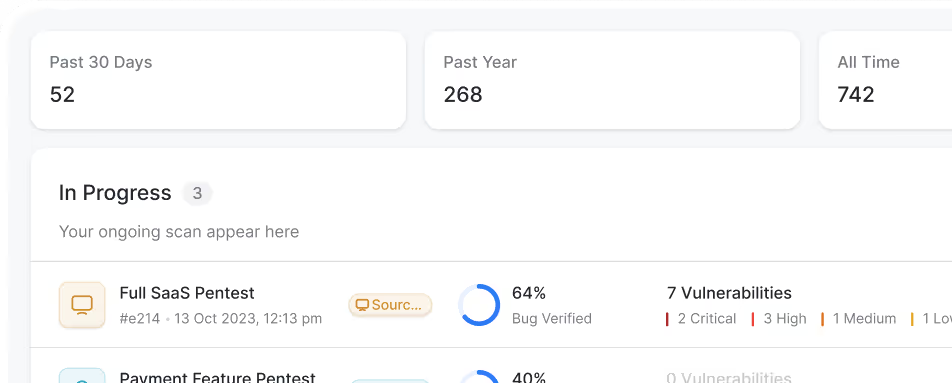

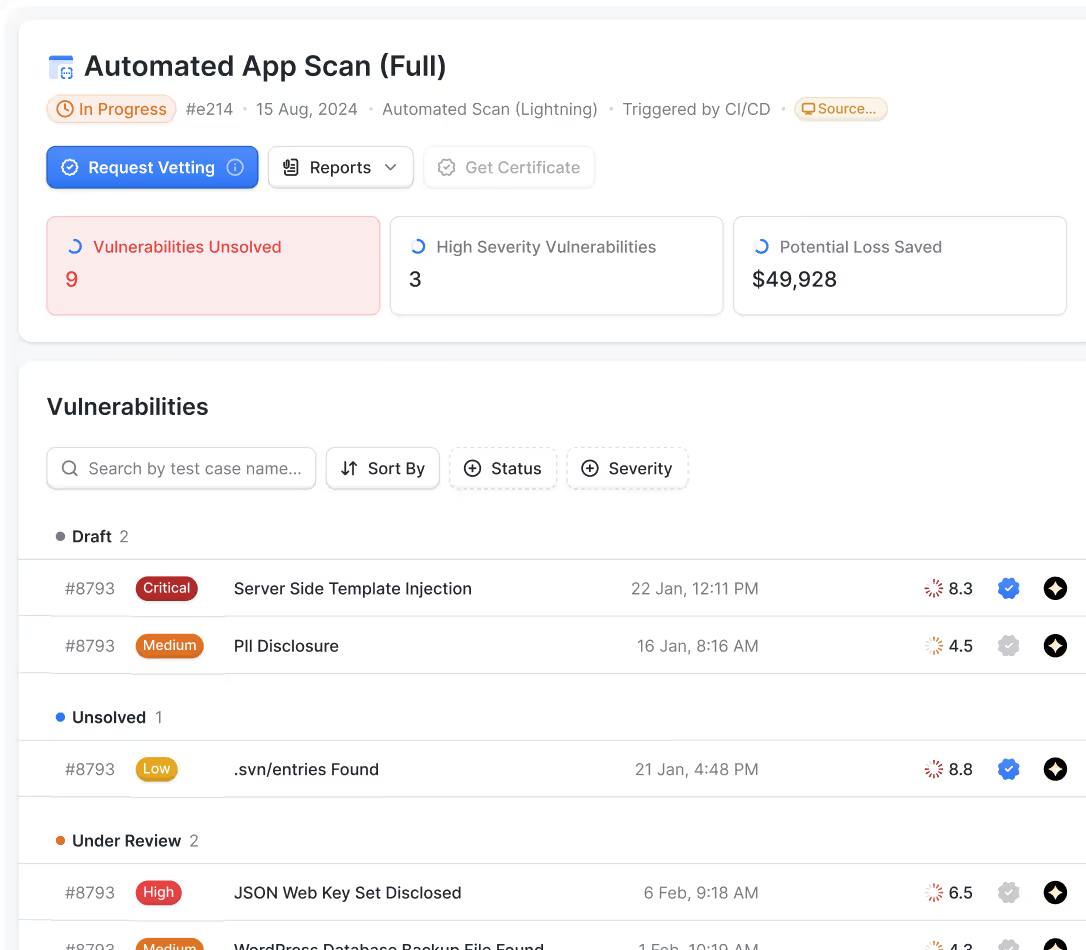

AI-powered dashboard to manage and scale pentests

DAST Vulnerability Scanner

15,000+ tests covering OWASP Top 10, CVEs, and access control flaws.

Authenticated, zero false positive scans with continuous monitoring.

Vulnerabilities mapped to compliances like ISO 27001, HIPAA, SOC 2, GDPR.

Detailed vulnerability reports with impact, severity, CVSS score, and $ loss.

Continuously improves by learning from manual pentests.

Upload OpenAPI specs to tailor scans to your environment.

Trusted by security teams working on AI

Astra secures AI-first companies that

handle billions of dollars in data,

predictions, and decisions.

Loved by 1000+ CTOs & CISOs worldwide

We are impressed by Astra's commitment to continuous rather than sporadic testing.

Astra not only uncovers vulnerabilities proactively but has helped us move from DevOps to DevSecOps

Their website was user-friendly & their continuous vulnerability scans were a pivotal factor in our choice to partner with them.

The combination of pentesting for SOC 2 & automated scanning that integrates into our CI pipelines is a game-changer.

I like the autonomy of running and re-running tests after fixes. Astra ensures we never deploy vulnerabilities to production.

We are impressed with Astra's dashboard and its amazing ‘automated and scheduled‘ scanning capabilities. Integrating these scans into our CI/CD pipeline was a breeze and saved us a lot of time.

We are impressed by Astra's commitment to continuous rather than sporadic testing.

Astra not only uncovers vulnerabilities proactively but has helped us move from DevOps to DevSecOps

Their website was user-friendly & their continuous vulnerability scans were a pivotal factor in our choice to partner with them.

The combination of pentesting for SOC 2 & automated scanning that integrates into our CI pipelines is a game-changer.

I like the autonomy of running and re-running tests after fixes. Astra ensures we never deploy vulnerabilities to production.

We are impressed with Astra's dashboard and its amazing ‘automated and scheduled‘ scanning capabilities. Integrating these scans into our CI/CD pipeline was a breeze and saved us a lot of time.

Frequently asked questions

What is AI penetration testing?

Can Astra test LLMs and generative AI systems?

Yes. Astra supports LLM & Gen AI application penetration testing, including prompt injection, context hijacking, output manipulation, and misuse scenarios

Will pentesting slow down our deployments?

Not at all. Astra integrates seamlessly into your CI/CD workflows with zero downtime testing.

Do you cover compliance requirements for AI?

We align your security posture with frameworks like the EU AI Act, ISO 42001, GDPR, and more.

Do you provide a certificate post-test?

Yes, a publicly verifiable certificate and detailed report are included after every test.

Why do businesses need AI pentesting services?

Businesses need AI pentesting because it blends continuous automated scans with manual testing to uncover real-world vulnerabilities, prioritize fixes, provide contextual remediation (including video PoCs), and deliver audit-ready evidence to reduce noise, speed-up resolution, and protect releases and compliance.

How are Astra’s AI penetration testing services different from automated tools?

Astra’s AI pentesting pairs automation driven breadth with certified manual depth including logic tests plubys OSCP/CEH-led manual pentests, vetted zero-false-positive comprehensive reports, AI-augmented test cases, video PoCs, two free rescans, and real-time expert support.

How long does an AI/LLM systems penetration testing take?

The duration typically ranges from 10 to 15 business days, depending on scope, complexity, and assets tested. This timeline covers automated scanning, manual validation, and delivery of comprehensive, audit-ready reports with prioritized remediation guidance.

How much do AI penetration testing services cost?

AI pentesting services typically range from $5,000 to $50,000+, depending on scope, complexity, and assets tested. Astra engagements include automated scans, manual pentests, two free rescans, and certification, where tailored pricing ensures coverage for both compliance-driven and large-scale security needs.